Oracle-DB: Ensure uniqueness across table boundaries

Consider the following requirement:

There are two tables. Table MASTER is referenced from table DETAIL.

CREATE TABLE Master ( ID NUMBER PRIMARY KEY , Company_ID NUMBER ); CREATE TABLE Detail ( ID NUMBER GENERATED BY DEFAULT ON NULL AS IDENTITY PRIMARY KEY, Master_ID NUMBER REFERENCES Master(ID), Value NUMBER );

The content of the table DETAIL should be unique in a combination of two values: the column DETAIL.VALUE and the column MASTER.COMPANY_ID from a directly referenced table.

Each column of this tables except the primary key columns can be changed.

Limits of solution approach with function based index

A first thought when securing uniqueness across table boundaries is often the use of function based indexes with a function that selects the second value from the referenced table.

CREATE OR REPLACE FUNCTION Get_Company_ID(p_Master_ID IN NUMBER) RETURN NUMBER DETERMINISTIC IS v_Company_ID NUMBER; BEGIN SELECT Company_ID INTO v_Company_ID FROM Master WHERE ID = p_Master_ID; RETURN v_Company_ID; END Get_Company_ID; / CREATE UNIQUE INDEX IX_Value_Company_ID_Unique ON Detail(Value, Get_Company_ID(Master_ID));

The technical implementation of function based indexes requires that the underlying function must be deterministic, but DETERMINISTIC can simply be declared by a keyword.

Functions are mostly not really deterministic if the return value of the function depends on table contents.

Let's test it with a simple change that violates this precondition:

INSERT INTO Master (ID, Company_ID) VALUES (8, 7); INSERT INTO Detail (Master_ID, Value) VALUES (8, 7); -- allowed because uniqueness is not violated UPDATE Master SET Company_ID = 6 WHERE ID = 8; -- contradicts deterministic at next call of Get_Company_ID UPDATE Detail SET Value = 9 WHERE Master_ID = 8; -- Results in "ORA-08102: index key not found, obj# 79600, file 12, block 1931 (2)"

Therefore, function-based indexes are not a suitable solution for our requirements if table data may change.

This post shows how the required uniqeness can be ensured using triggers.

tl;dr: Proceed to the section "Final solution" at the end of this post.

Let's remove that function based index and create the needed trigger at table DETAIL:

After completion of a DML operation it checks if the uniqueness is till given, otherwise an exception is raised.

The pending DML operation is canceled in case of exception inside the trigger.

DROP INDEX IX_Value_Company_ID_Unique;

CREATE OR REPLACE TRIGGER Detail_Value_Company_ID_Unique

FOR INSERT OR UPDATE OF Master_ID, Value ON Detail

COMPOUND TRIGGER

AFTER STATEMENT IS

BEGIN

FOR Rec IN (SELECT d.Value, m.Company_ID

FROM Detail d

JOIN Master m ON m.ID = d.Master_ID

GROUP BY d.Value, m.Company_ID

HAVING COUNT(*) > 1

) LOOP

RAISE_APPLICATION_ERROR(-20999, 'Duplicate values found for VALUE = '||Rec.Value||', COMPANY_ID = '||Rec.Company_ID);

END LOOP;

END AFTER STATEMENT;

END;

/

Now check what happens at DML operations:

-- create some records in table MASTER INSERT INTO Master (ID, Company_ID) VALUES (1, 1); INSERT INTO Master (ID, Company_ID) VALUES (2, 1); INSERT INTO Master (ID, Company_ID) VALUES (3, 2); -- create or update records in table DETAIL INSERT INTO Detail (Master_ID, Value) VALUES (1, 1); -- allowed because uniqueness is not violated INSERT INTO Detail (Master_ID, Value) VALUES (1, 2); -- allowed because uniqueness is not violated INSERT INTO Detail (Master_ID, Value) VALUES (3, 1); -- allowed because uniqueness is not violated INSERT INTO Detail (Master_ID, Value) VALUES (2, 1); -- fails because combination of value and company_id already exists INSERT INTO Detail (Master_ID, Value) SELECT 2, 3 FROM DUAL UNION SELECT 2, 1 FROM DUAL; -- fails because second record does not meet the condition UPDATE Detail SET Master_ID = 1 WHERE Master_ID = 3 AND Value = 1; -- fails beacuse combination of value and company_id already exists

When the uniqueness condition is violated, an exception is thrown indicating the violated values. This is helpful to identify the violating records at bulk DML operations.

ORA-20999: Duplicate values found for VALUE = 1, COMPANY_ID = 1 ORA-06512: at "CUST.DETAIL_VALUE_COMPANY_ID_UNIQUE", line 11 ORA-04088: error during execution of trigger 'CUST.DETAIL_VALUE_COMPANY_ID_UNIQUE'

But what if the COMPANY_ID in table MASTER changes?

We need an additional trigger at the column MASTER.COMPANY_ID. But, in this case only for UPDATE operations.

Here possibly also the number of now violating records in table DETAIL is interesting.

CREATE OR REPLACE TRIGGER Master_Value_Company_ID_Unique

FOR UPDATE OF Company_ID ON Master

COMPOUND TRIGGER

AFTER STATEMENT IS

BEGIN

FOR Rec IN (SELECT d.Value, m.Company_ID, COUNT(*) Amount

FROM Detail d

JOIN Master m ON m.ID = d.Master_ID

GROUP BY d.Value, m.Company_ID

HAVING COUNT(*) > 1

) LOOP

RAISE_APPLICATION_ERROR(-20999, Rec.Amount||' duplicate values found in Detail for VALUE = '||Rec.Value||', COMPANY_ID = '||Rec.Company_ID);

END LOOP;

END AFTER STATEMENT;

END;

/

Now also changes of MASTER.COMPANY_ID are checked for uniqueness violations.

UPDATE Master SET Company_ID = 3 WHERE ID = 3; -- allowed because uniqueness is not violated UPDATE Master SET Company_ID = 1 WHERE ID = 3; -- fails beacuse combination of value and new company_id already exists

Now our solutions seem perfectly working and should be from a functional perspective.

What if concurrent sessions have changes in pending transactions that we cannot select to check?

We must ensure that at the uniqueness check in the AFTER STATEMENT section we can read the whole content of both tables, no matter if committed or uncommitted. Oracle has no way to read uncomitted data, so we need to use the serialization of transactions to ensure that there are not uncommitted changes of concurrent transactions at the check in the AFTER STATEMENT section of the trigger.

The simplest solution would be to use an exclusive lock on the master table as a global semaphore in both triggers.

This way, the results of a DML operation at Detail or Master are checked for uniqueness only after all concurrent transactions manipulating this tables are finished.

AFTER STATEMENT IS BEGIN LOCK TABLE Master IN EXCLUSIVE MODE; -- Wait until all competing transactions have finished ... do the uniqueness check ... END AFTER STATEMENT;

If you provoke uniqueness violations simultaneously in different sessions then the session with the last commit will get the error.

Global serialization of DML on a table may significantly downgrade performance.

Unfortunately, I have not yet found a waterproof solution with that trigger approach without global serialization, at least for insert DML.

There might be a possible performance problem: We're using a brute force check of the whole tables for each DML operation

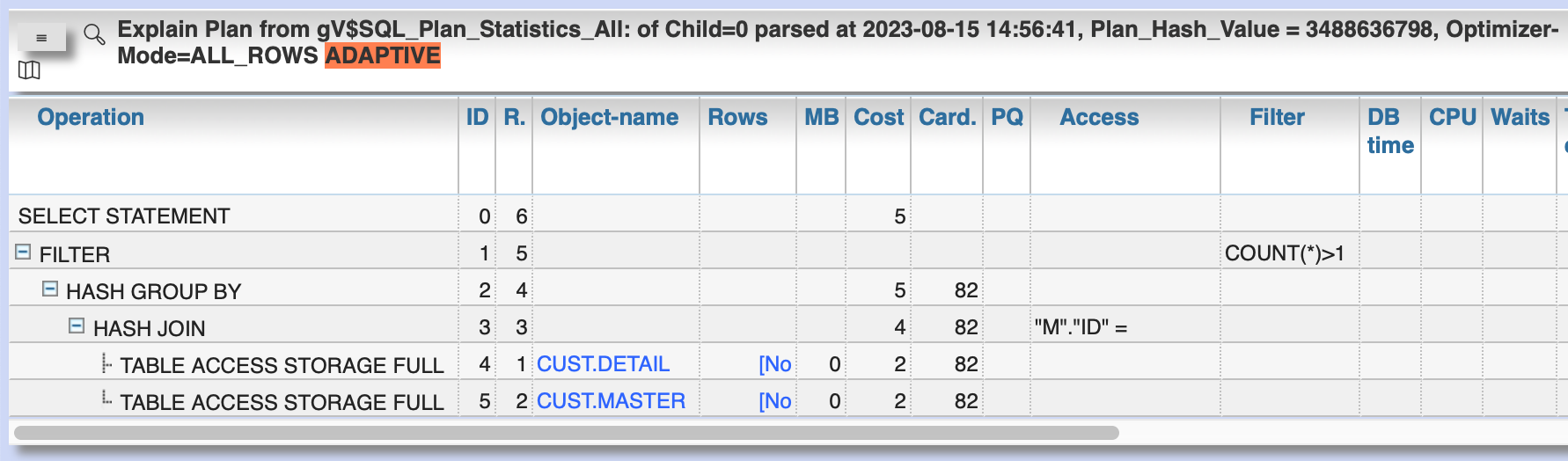

This is the execution plan of the check SQL for the current brute force solution:

A solution for this performance problem could be to set an index to DETAIL.VALUE and limit the records to be checked to those whose values are used by the DML operation. This is the trigger for table DETAIL after ajustment:

CREATE INDEX IX_Detail_Value ON Detail(Value);

CREATE OR REPLACE TRIGGER Detail_Value_Company_ID_Unique

FOR INSERT OR UPDATE OF Master_ID, Value ON Detail

COMPOUND TRIGGER

TYPE touched_values_type IS TABLE OF NUMBER INDEX BY BINARY_INTEGER;

touched_values touched_values_type;

BEFORE STATEMENT IS

BEGIN

touched_values.DELETE; -- Ensure no traces of previous calls

END BEFORE STATEMENT;

AFTER EACH ROW IS

BEGIN

touched_values(:new.Value) := 1; -- remember the value as key in associative array

END AFTER EACH ROW;

AFTER STATEMENT IS

BEGIN

LOCK TABLE Master IN EXCLUSIVE MODE; -- Wait until all competing transactions have finished

FOR i IN touched_values.FIRST .. touched_values.LAST LOOP

FOR Rec IN (SELECT /*+ USE_NL(d m) */ m.Company_ID

FROM Detail d

JOIN Master m ON m.ID = d.Master_ID

WHERE d.Value = i

GROUP BY m.Company_ID

HAVING COUNT(*) > 1

) LOOP

RAISE_APPLICATION_ERROR(-20999, 'Duplicate values found indexed for VALUE = '||i||', COMPANY_ID = '||Rec.Company_ID);

END LOOP;

END LOOP;

END AFTER STATEMENT;

END;

/

Now the execution plan looks like this and selects only the needed records:

Next question regarding performance: Is it really necessary to check for each affected DETAIL record against the COMPANY_ID in Table MASTER by traversing an nested loop join?

No: the check against table MASTER is needed only once for each combination of VALUE, Master_ID

So let us tell the DB to first identify the needed MASTER_IDs and then attach each MASTER record only once to ask for the COMPANY_ID:

SELECT /*+ USE_NL(d m) */ m.Company_ID

FROM (SELECT /*+ NO_MERGE */ Master_ID, COUNT(*) Amount

FROM Detail

WHERE Value = i

GROUP BY Master_ID

) d

JOIN Master m ON m.ID = d.Master_ID

GROUP BY m.Company_ID

HAVING SUM(d.Amount) > 1

Now the execution plan looks like this, selects only the needed records and each record only once:

Last question regarding performance: Is it really necessary to pick the table records by index rowid one by one in that case?

Not necessarily: for optimal performance you may enlarge the indexes and avoid the table accesses in the check SQL for both tables:

DROP INDEX IX_Detail_Value; CREATE INDEX IX_Detail_Value ON Detail(Value, Master_ID); CREATE INDEX ID_Master_ID_Company_ID ON Master(ID, Company_ID);

Now only the indexes are accessed for the check SQL:

Final solution: triggers for both tables

CREATE OR REPLACE TRIGGER Detail_Value_Company_ID_Unique

FOR INSERT OR UPDATE OF Master_ID, Value ON Detail

COMPOUND TRIGGER

TYPE touched_values_type IS TABLE OF NUMBER INDEX BY BINARY_INTEGER;

touched_values touched_values_type;

BEFORE STATEMENT IS

BEGIN

touched_values.DELETE; -- Ensure no traces of previous calls

END BEFORE STATEMENT;

AFTER EACH ROW IS

BEGIN

touched_values(:new.Value) := 1; -- remember the value as key in associative array

END AFTER EACH ROW;

AFTER STATEMENT IS

BEGIN

LOCK TABLE Master IN EXCLUSIVE MODE; -- Wait until all competing transactions have finished

FOR i IN touched_values.FIRST .. touched_values.LAST LOOP

FOR Rec IN (SELECT /*+ USE_NL(d m) */ m.Company_ID

FROM (SELECT /*+ NO_MERGE */ Master_ID, COUNT(*) Amount

FROM Detail

WHERE Value = i

GROUP BY Master_ID

) d

JOIN Master m ON m.ID = d.Master_ID

GROUP BY m.Company_ID

HAVING SUM(d.Amount) > 1

) LOOP

RAISE_APPLICATION_ERROR(-20999, 'Duplicate values found indexed for VALUE = '||i||', COMPANY_ID = '||Rec.Company_ID);

END LOOP;

END LOOP;

END AFTER STATEMENT;

END;

/

CREATE OR REPLACE TRIGGER Master_Value_Company_ID_Unique

FOR UPDATE OF Company_ID ON Master

COMPOUND TRIGGER

AFTER STATEMENT IS

BEGIN

LOCK TABLE Master IN EXCLUSIVE MODE; -- Wait until all competing transactions have finished

FOR Rec IN (SELECT d.Value, m.Company_ID, COUNT(*) Amount

FROM Detail d

JOIN Master m ON m.ID = d.Master_ID

GROUP BY d.Value, m.Company_ID

HAVING COUNT(*) > 1

) LOOP

RAISE_APPLICATION_ERROR(-20999, Rec.Amount||' duplicate values found in Detail for VALUE = '||Rec.Value||', COMPANY_ID = '||Rec.Company_ID);

END LOOP;

END AFTER STATEMENT;

END;

/

A similar SQL optimization as for the trigegr of table DETAIL can also be done for the trigger of table MASTER.

Thats it. If you've found a better solution or discovered any errors, please leave a comment.

What happens when you have multiple sessions inserting and updating and the trigger doesn't see the changes (uncommitted data) ? Multi-session solutions for this would require using a serialization mechanism like the dbms_lock package or possibly materialized views with check constraints.

ReplyDeleteCheers,

You are completely right. Covering concurrency for this solution requires serialization of concurrent sessions until end of transactions. A simple but not very efficient solutions is a global lock serializing all included transactions, here using the table Master for that purpose. A more efficient but also much more complex solution could be a separate semaphore table maintained by autonomous transactions from inside the trigger. This way serialization could be limited to transactions using the same values for the key columns.

Delete